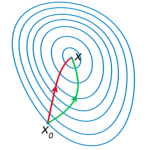

Optical flow is the apparent motion of objects across multiple images or frames of video. It has many applications in computer vision including 3D structure from motion and video super resolution. In this article I illustrate one specific technique for calculating sub-pixel accurate dense optical flow by matching continuous image areas using optimization techniques over bilinear splines.

Continue reading Optical Flow via Bilinear Spline Matching

Category Archives: Tutorial

Logistic Regression and Optimization Basics

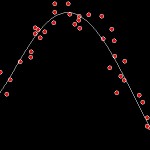

Logistic regression uses a sigmoid (logistic) function to pose binary classification as a curve fitting (regression) problem. It can be a useful technique, but more importantly it provides a good example to illustrate the basics of nonlinear optimization. I’ll show how to solve this problem iteratively with both gradient descent and Newton’s method as well as go over the Wolfe conditions that we’ll satisfy to guarantee fast convergence. These are the prerequisites for the upcoming neural network articles!

Continue reading Logistic Regression and Optimization Basics

Classification Validation

Continue reading Classification Validation

Decision Trees and Forests

Decision trees make predictions by asking a series of simple questions. I’ll show you how to train one using a greedy approach, how to build decision forests to take advantage of the wisdom of crowds, and then we’ll be optimizing a rotation forest to build a fast version of one of the most accurate classifiers available.

Continue reading Decision Trees and Forests

Least Squares Curve Fitting

Today we’re talking about linear least squares curve fitting, but don’t be fooled by the name. I’ll show how to use it to fit polynomials and other functions, how to derive it, and how to calculate it efficiently using a Cholesky matrix decomposition.

Continue reading Least Squares Curve Fitting

Naive Bayes

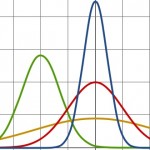

Assume a distribution and conditional independence, calculate means and standard deviations, and use it to make predictions. It’s about the simplest thing that qualifies as machine learning. I’m not really a fan, but we’ve got to start somewhere, right?

Continue reading Naive Bayes

What is Inductive Bias?

Inductive bias is the set of assumptions a learner uses to predict results given inputs it has not yet encountered. It’s also the name of this blog. Let’s talk about swans.

Continue reading What is Inductive Bias?